JWL // literature

WrittenInterviewCanonical---12MAR2025---JWL.pdf

Yes, this is a job application, but it is also crafted as a kind of research artefact that can immediately be used and cited in research. Official citation details will follow later.

Currently one might cite this as such:

@article{lutalo-2025,
  author = {Lutalo, Joseph Willrich},
  journal = {mak},
  month = {3},
  title = {{Written Interview for Python Software Engineer, Commercial Systems position at Canonical}},
  year = {2025},
  url = {https://www.academia.edu/resource/work/128155827},
}

Blackboard Computing Adventures 💡

---[INTRO]: Today's review concerns an ACM SLE paper from 2019. The paper, titled ❝From DSL Specification to Interactive Computer Programming Environment❞ introduces us to the helpful idea of auto-generated modern advanced IDEs for especially textual interpretable…

We continue with our ACM SLE research reviews below 🌐⚡👇🏻👇🏻

---[INTRO]:

Today's review concerns a 2004 paper published in "ACM Transactions on Programming Languages and Systems" (TOPLAS). The paper, co-authored by 5 people from the popular, traditional American telephony company AT&T, is titled ❝Hancock: A Language For Analyzing Transactional Data Streams❞. It delves into the language that was designed and first implemented at that company[1] to help in-house analysts of especially gigantic call-data streams perform their tasks more efficiently.

---[BRIEF BIO]:

The lead author, Professor Corinna Cortes, is a prominent researcher originally from AT&T Bell Labs, where she contributed significantly to machine learning, particularly to the development of Support Vector Machines (SVMs), a widely used algorithm in the field. For that work she received the Paris Kanellakis Theory and Practice Award in 2008. She later joined Google Research as a Vice President, continuing her work in theoretical and applied machine learning[2][3][4].

The second author, Kathleen Fisher, is a computer scientist specializing in programming languages and cybersecurity. She is an adjunct professor at Tufts University and has held leadership roles, including as Director of DARPA's Information Innovation Office, where she managed programs like HACMS (High-Assurance Cyber Military Systems) and PPAML (Probabilistic Programming for Advancing Machine Learning). Before these roles, she was a Principal Member of the Technical Staff at AT&T Labs Research, where she contributed to advances in programming languages and domain-specific languages, such as the Hancock system for stream processing and the PADS system for managing ad hoc data[5][6].

The remaining co-authors (Daryl Pregibon, Anne Rogers and Frederick Smith[1]) were also prominent AT&T Bell Labs researchers.

---[ABOUT PAPER]:

The core idea in this paper is to take a task originally carried out in the mainstream general-purpose language (GPL) C, namely the systematic, purpose-oriented interception and routine analysis of large volumes of in-transit telephony data, and to perform it instead in "Hancock", a language designed specifically for the purpose by a team of 2 researchers and their 2 assistants at AT&T[1].

The paper[1] begins with the motivations for this formidable undertaking. We learn that the analysts faced the daunting task of regularly extracting useful patterns and insights from gigabytes of telephony data arriving in hourly and daily batches: for example, 100s of millions of calls involving 10s of millions of distinct Mobile Phone Numbers (MPNs). At times they also needed to look up the pattern/signature associated with a particular MPN within seconds via a web-browser interface.

Originally, such tasks were handled by in-house software written mainly in C and/or UNIX shell programs. Over time, however, evolving these programs to cater for new patterns (also referred to as "Signatures") became difficult, since the C programs were generally unwieldy and nearly impossible to tweak without introducing errors. Thus a new DSL for the task was envisioned, designed and then implemented at AT&T.
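To make the notion of a per-number "signature" a little more concrete, here is a minimal Python sketch (not Hancock code, and not taken from the paper). It assumes a hypothetical call record with three fields and uses simple exponential smoothing to fold each new batch into the stored signatures; the field names, the phone numbers and the `weight` value are all illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


# Hypothetical call record; the field names are assumptions, not the paper's schema.
@dataclass(frozen=True)
class Call:
    origin: str      # calling number
    dialed: str      # called number
    duration_s: int  # call length in seconds


def batch_totals(calls):
    """Sum outgoing call seconds per originating number for one batch."""
    totals = defaultdict(int)
    for call in calls:
        totals[call.origin] += call.duration_s
    return dict(totals)


def update_signatures(signatures, calls, weight=0.25):
    """Blend one batch into the stored signatures (exponential smoothing).

    `weight` is an illustrative choice. Numbers seen for the first time
    start from their batch total; numbers absent from the batch are left
    untouched in this simplified sketch.
    """
    updated = dict(signatures)
    for number, total in batch_totals(calls).items():
        if number in updated:
            updated[number] = (1 - weight) * updated[number] + weight * total
        else:
            updated[number] = float(total)
    return updated


# Made-up batches, purely for illustration.
batch_1 = [
    Call("555-0100", "555-0199", 120),
    Call("555-0100", "555-0142", 60),
    Call("555-0111", "555-0100", 30),
]
batch_2 = [Call("555-0100", "555-0199", 20)]

signatures = update_signatures({}, batch_1)
signatures = update_signatures(signatures, batch_2)
print(signatures["555-0100"])  # 0.75 * 180 + 0.25 * 20 = 140.0
```

Even at this toy scale one can see why hand-written C for such tasks gets unwieldy: the bookkeeping (grouping, persistent per-number state, blending old and new values) quickly dominates the actual analysis logic.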

Hancock was designed with the high performance of C in mind, as well as C's familiarity to the analysts who had already used it successfully for these tasks before the new language came to life. In fact, Hancock was designed and implemented as an extension of the C language. It retains C's performant, low-level nature, which stays close to the target machine architecture, while its careful abstractions and C-like syntax make programming in Hancock easier for those already familiar with C.

Hancock compiles to C source code, which is then combined with the C-implemented Hancock runtime to generate machine-native executable binaries.

Further, we learn that, although originally motivated by problems in telephony, the language has been applied successfully to other kinds of data sources: WiFi, calling-card records, TCP dumps and more. It is also somewhat related, in its pattern-action character, to older data-processing languages such as AWK, though it is argued that Hancock would be overkill for lightweight tasks such as processing mere text files.
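The pattern-action flavour mentioned above can be sketched roughly as follows. This is plain Python, not Hancock syntax: the `iterate` helper, its three handler names and the sample records are all hypothetical, chosen only to show a stream being sorted by originating number and then driving begin/record/end actions, much like AWK's BEGIN, pattern and END blocks.

```python
from itertools import groupby
from operator import itemgetter

# Hypothetical (origin, dialed, seconds) records; not a real call-detail format.
CALLS = [
    ("555-0100", "555-0199", 120),
    ("555-0111", "555-0100", 30),
    ("555-0100", "555-0142", 60),
]


def iterate(calls, on_begin, on_call, on_end):
    """Sort the stream by originating number, then fire one handler per event."""
    by_origin = sorted(calls, key=itemgetter(0))
    for origin, group in groupby(by_origin, key=itemgetter(0)):
        state = on_begin(origin)            # a new number starts
        for record in group:
            state = on_call(state, record)  # one call by that number
        on_end(origin, state)               # no more calls for that number


report = {}
iterate(
    CALLS,
    on_begin=lambda origin: 0,
    on_call=lambda total, record: total + record[2],
    on_end=lambda origin, total: report.__setitem__(origin, total),
)
print(report)  # {'555-0100': 180, '555-0111': 30}
```

The point of the sketch is the separation of concerns: the traversal order and event detection live in one place, while the analyst only supplies the small actions to run at each event.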

Also, and interestingly, the paper builds on and relates to ideas first presented in a 1975 paper on COBOL by one "Michael Jackson"! It is also related to the data-stream processing capabilities of alternative technologies such as "Aurora" and "Telegraph"[1].

So, overall, this is a terrific paper on the core technologies and methods employed in network-based espionage and transaction fingerprinting, stuff that reminds one of crime analysis and/or prevention (an area the reviewer has previously worked in himself[7]). It is also a great example of how to report original research on a new DSL.

---[CRITICISM of PAPER]:

I expected to find an example of the kind of raw data that the programs discussed in this paper actually deal with (even just a snippet or a much simplified example), but did not find any.

Apart from the discussion and demonstration of Hancock's use in telephony contexts, there are scant examples (especially illustrative code) or discussion of how to use the language for other similar, but non-telco, tasks. For example, I feel that Hancock closely relates to popular hacker/network-analysis tools such as "Wireshark", which can be used to process raw ASCII or binary dumps of network streams; however, such a comparison is likewise missing.

---[REFS]:

1. Cortes, Corinna, Kathleen Fisher, Daryl Pregibon, Anne Rogers, and Frederick Smith. "Hancock: A language for analyzing transactional data streams." ACM Transactions on Programming Languages and Systems (TOPLAS) 26, no. 2 (2004): 301-338.

2. https://research.google/people/author121/

3. https://en.wikipedia.org/wiki/Corinna_Cortes

4. https://www.millennia2025-foundation.org/wa_files/cv_corinna_cortes.pdf

5. https://www.cs.tufts.edu/~kfisher/Kathleen_Fisher/Home.html

6. https://conf.researchr.org/profile/conf/kathleenfisher

7. Lutalo, J. W. 2025. Written Interview for Python Software Engineer, Commercial Systems position at Canonical. Mak. https://www.academia.edu/resource/work/128155827.

#review #notes #acm #sle #jwl #phd

WrittenInterviewCanonical---18MAR2025---JWL.pdf

58.5 MB

UPDATED version of the JWL Canonical Paper [18 MAR, 2025 edtn]

Summarizing the ongoing cutting-edge research at the Nuchwezi Research Lab, where [fut. Prof] JWL is exploring the space between languages, intelligence and abstract machines. We also try to visualise the important terms in native languages. For God and My Country ✨✨🇺🇬✓

Meanwhile, around this time two years ago, an idea occurred to me concerning a meta-theory of the psychology and cognition of intelligent and self-aware beings (artificial, natural or spiritual). I am yet to revisit the core idea and develop it further, but it could contribute to some formidable future work/research.

--- Joseph W. Lutalo @ Nuchwezi Research

#ideas #basic #theory #artificialintelligence #cognition #psychology #philosophy #phd #jwl

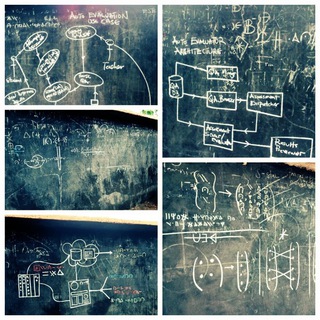

🤦🏻♂️🤣👏🏻😆😆 details concerning our research on RNGs to follow later...

In this Mini-Lecture, Joseph takes us on a brief tour of some interesting ideas he has come up with in his quest to develop a new mathematical theory for the random generation of numbers. He illustrates this background with symbolic depictions of how geometry and number-theoretic operations could come together to allow for K-System level RNGs, such as can form the basis of many important applications in physics, the bio-computational sciences, software engineering and more. This is just one of many voluntary lectures from Joseph's community-serving BC-Lecture Series (hosted mainly on Telegram), also available on YouTube under Mini-Lectures:

https://YouTube.com/@1JWL

https://www.youtube.com/playlist?list=PL9nqA7nxEPgv_CqHOxIDj2Jf1gHAEV-B-

or best view mode: all266.com/mlectures

LINK TO ACTUAL LECTURE:

1. https://youtu.be/DT2xaEghuRQ?feature=shared

2. https://t.me/bclectures/584

#research #advances #fundamentals #numbers #rngs #cryptography #computing #jwl #phd #nuchwezi #minilectures

So-called enablers of Artificial Intelligence, and the "Spirit in the Machine" aka. RNGs 🤞🔆