Stable, chronic in-vivo recordings from a fully wireless subdural-contained 65,536-electrode brain-computer interface device

https://www.biorxiv.org/content/10.1101/2024.05.17.594333v1

bioRxiv

Minimally invasive, high-bandwidth brain-computer-interface (BCI) devices can revolutionize human applications. With orders-of-magnitude improvements in volumetric efficiency over other BCI technologies, we developed a 50-μm-thick, mechanically flexible micro…

Neuralink Compression Challenge

"Compression is essential: N1 implant generates ~200Mbps of electrode data (1024 electrodes @ 20kHz, 10b resolution) and can transmit ~1Mbps wirelessly.

So > 200x compression is needed.

Compression must run in real time (< 1ms) at low power (< 10mW, including radio)."
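The figures in the quote can be checked with a quick back-of-the-envelope calculation. The sketch below is only an illustration: the function names are hypothetical, and it assumes "Mbps" means 10^6 bits per second and that the ~1 Mbps link figure is the full usable payload rate.

```python
# Rough check of the numbers quoted above (assumes 1 Mbps = 1e6 bit/s).

def raw_bitrate_bps(electrodes: int, sample_rate_hz: int, bits_per_sample: int) -> int:
    """Uncompressed data rate of the electrode array in bits per second."""
    return electrodes * sample_rate_hz * bits_per_sample


def required_compression_ratio(raw_bps: float, link_bps: float) -> float:
    """Factor by which the raw stream must shrink to fit the wireless link."""
    return raw_bps / link_bps


raw = raw_bitrate_bps(electrodes=1024, sample_rate_hz=20_000, bits_per_sample=10)
link = 1_000_000  # ~1 Mbps wireless budget from the quote
ratio = required_compression_ratio(raw, link)

# Bits entering and leaving the compressor per 1 ms real-time window.
bits_in_per_ms = raw // 1000
bits_out_per_ms = link // 1000

print(f"raw: {raw / 1e6:.1f} Mbps, required ratio: {ratio:.1f}x")
print(f"per 1 ms window: {bits_in_per_ms} bits in, {bits_out_per_ms} bits out")
```

So 1024 × 20,000 × 10 gives 204.8 Mbps, which matches the "~200Mbps" and "> 200x" figures in the quote.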

https://content.neuralink.com/compression-challenge/README.html

Forwarded from NeuroIDSS

Here it searches arXiv for 'increase working memory using EEG neurofeedback', then, on the basis of a Timeflux YAML, generates JSON with modifications taken from the scientific article.

https://github.com/neuroidss/create_function_chat

Brain-JEPA: Brain Dynamics Foundation Model with Gradient Positioning and Spatiotemporal Masking

https://www.arxiv.org/abs/2409.19407

https://github.com/Eric-LRL/Brain-JEPA

arXiv.org

Brain-JEPA: Brain Dynamics Foundation Model with Gradient...

We introduce Brain-JEPA, a brain dynamics foundation model with the Joint-Embedding Predictive Architecture (JEPA). This pioneering model achieves state-of-the-art performance in demographic...

Forwarded from Brainstart & Center for Bioelectric Interfaces, HSE University

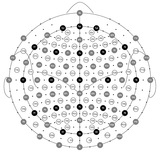

Friends, we are happy to share some great news! 🎉 We have completed an important study analyzing the sensorimotor rhythm with a promising technology, magnetoencephalography based on optically pumped magnetometers (OPM-MEG). The results are already available on arXiv in the paper Low count of optically pumped magnetometers furnishes a reliable real-time access to sensorimotor rhythm.

In this work we examined an implementation of a brain-computer interface based on motor imagery and demonstrated the potential of OPM sensors for controlling external devices in real time.

🔗 You can read our paper here: https://doi.org/10.48550/arXiv.2412.18353

🧐 You can discuss it here:

https://www.alphaxiv.org/abs/2412.18353

📩 We welcome your questions, comments, and discussion!

Thank you for following our projects and supporting us! 🙌

arXiv.org

Low count of optically pumped magnetometers furnishes a reliable...

This study presents an analysis of sensorimotor rhythms using an advanced, optically-pumped magnetoencephalography (OPM-MEG) system - a novel and rapidly developing technology. We conducted...

Decoding semantics from natural speech using human intracranial EEG

https://doi.org/10.1101/2025.02.10.637051

https://doi.org/10.48550/arXiv.2508.10409

AnalogSeeker: An Open-source Foundation Language Model for Analog Circuit Design

In this paper, we propose AnalogSeeker, an effort toward an open-source foundation language model for analog circuit design, with the aim of integrating domain knowledge and giving design assistance. To overcome the scarcity of data in this field, we employ a corpus collection strategy based on the domain knowledge framework of analog circuits. High-quality, accessible textbooks across relevant subfields are systematically curated and cleaned into a textual domain corpus. To address the complexity of knowledge of analog circuits, we introduce a granular domain knowledge distillation method. Raw, unlabeled domain corpus is decomposed into typical, granular learning nodes, where a multi-agent framework distills implicit knowledge embedded in unstructured text into question-answer data pairs with detailed reasoning processes, yielding a fine-grained, learnable dataset for fine-tuning. To address the unexplored challenges in training analog circuit foundation models, we explore and share our training methods through both theoretical analysis and experimental validation. We finally establish a fine-tuning-centric training paradigm, customizing and implementing a neighborhood self-constrained supervised fine-tuning algorithm. This approach enhances training outcomes by constraining the perturbation magnitude between the model's output distributions before and after training. In practice, we train the Qwen2.5-32B-Instruct model to obtain AnalogSeeker, which achieves 85.04% accuracy on AMSBench-TQA, the analog circuit knowledge evaluation benchmark, a 15.67 percentage-point improvement over the original model, and is competitive with mainstream commercial models. Furthermore, AnalogSeeker also shows effectiveness in the downstream operational amplifier design task. AnalogSeeker is open-sourced at https://huggingface.co/analogllm/analogseeker for research use.
